At its March “Peek Performance” event, Apple revealed its latest and greatest Apple Silicon to date, the M1 Ultra, inside what is, for the first time in over a decade, a brand new Mac: the Mac Studio. During the presentation, Apple made many bold claims, such as an astounding 800 GB/s of memory bandwidth and performance that may beat top-of-the-line cards like Nvidia’s RTX 3090. As Carl Sagan famously put it: “Extraordinary claims require extraordinary evidence.” Many are skeptical that an integrated SoC from a laptop-focused chip family can beat full-power, no-limits desktop components like the RTX 3090. We investigate these claims.

Executive Summary:

- The M1 Ultra is the latest in Apple’s line of Apple Silicon chips, introduced at the “Peek Performance” event.

- Apple touts it as very competitive with Nvidia’s top-of-the-line card, the RTX 3090.

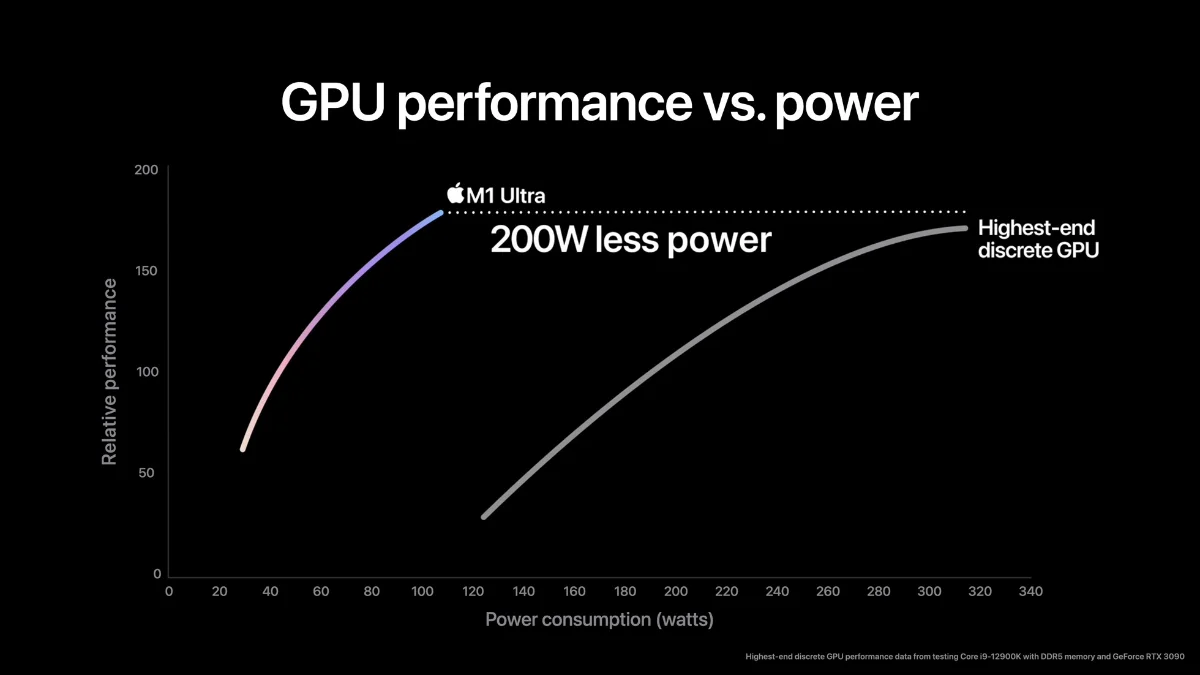

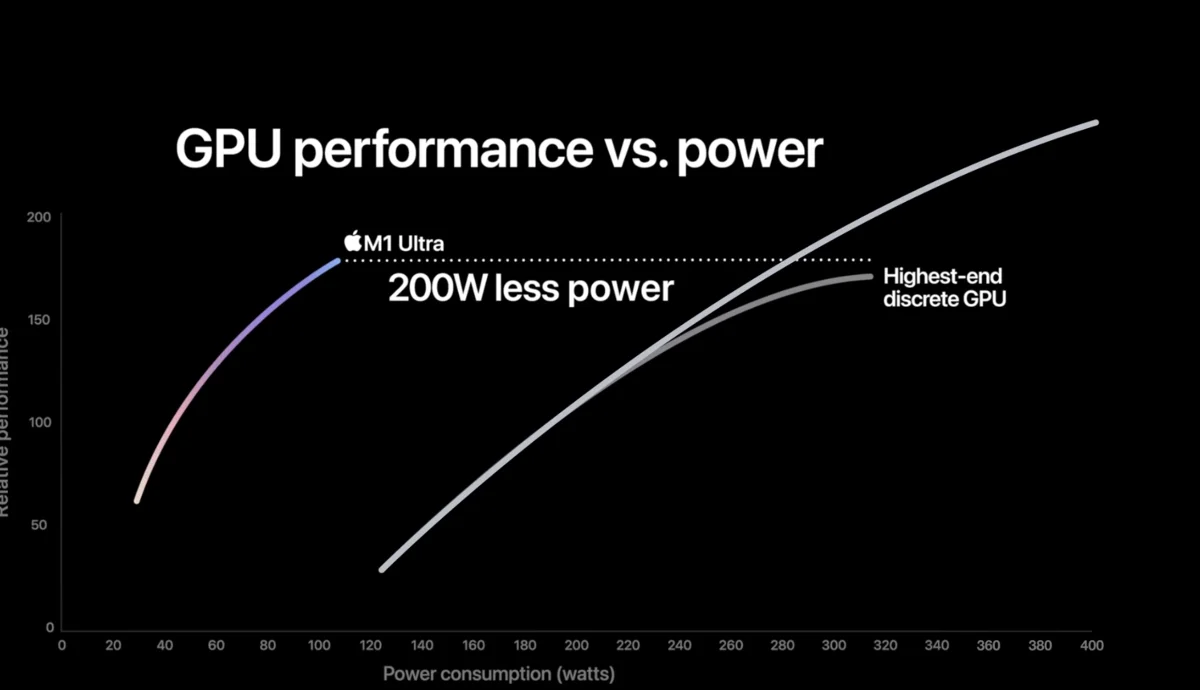

- Based on Apple’s graph, the M1 Ultra is slightly more performant than the RTX 3090 while consuming 200 watts less power.

- In reality, the RTX 3090 is more performant because the card can easily draw 300 to 400 watts of power. With future cards expected to consume even more power, Nvidia is expected to remain the market leader in the near term.

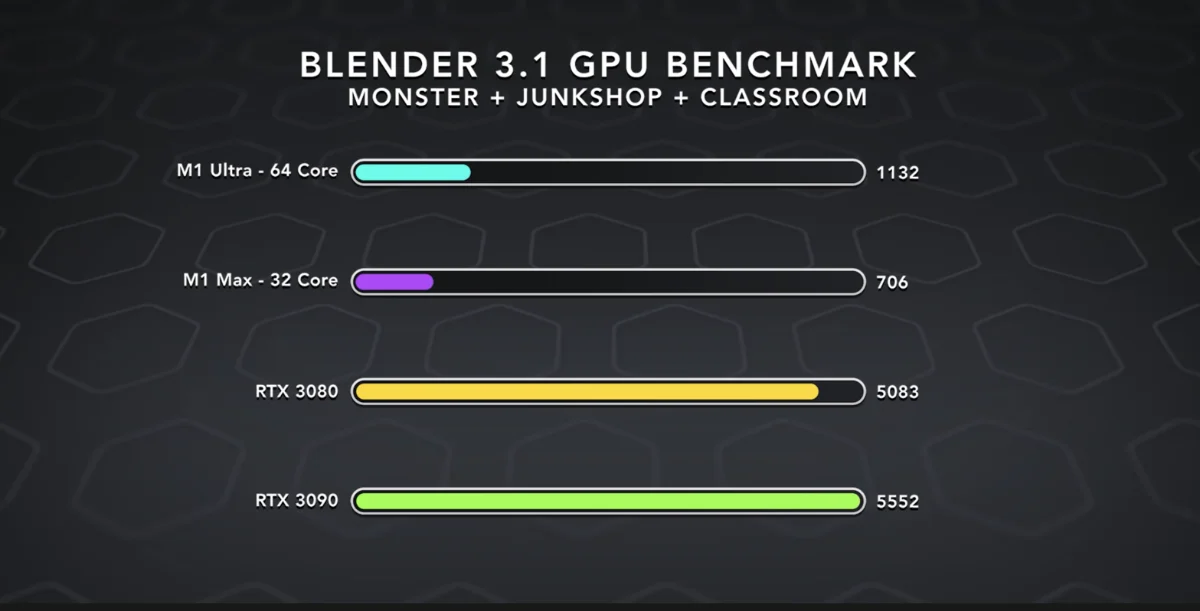

- Another performance factor to be aware of is the software-side optimization needed to take advantage of the M1 Ultra. Benchmark software shows numbers that are close, but certain apps like Blender struggle to use the M1 Ultra’s power.

- Most games are not optimized for macOS in general or for Apple Silicon in particular. Tests show that games do not utilize 100% of the M1 Ultra’s power while running.

- Overall, the M1 Ultra is competitive with the RTX 3090, as long as your software is optimized for it, like Apple’s Final Cut Pro.

M1 Ultra

For a more detailed look at the M1 Ultra, you can read our coverage here. We also compare the M1 Ultra against Intel’s latest Core chips here.

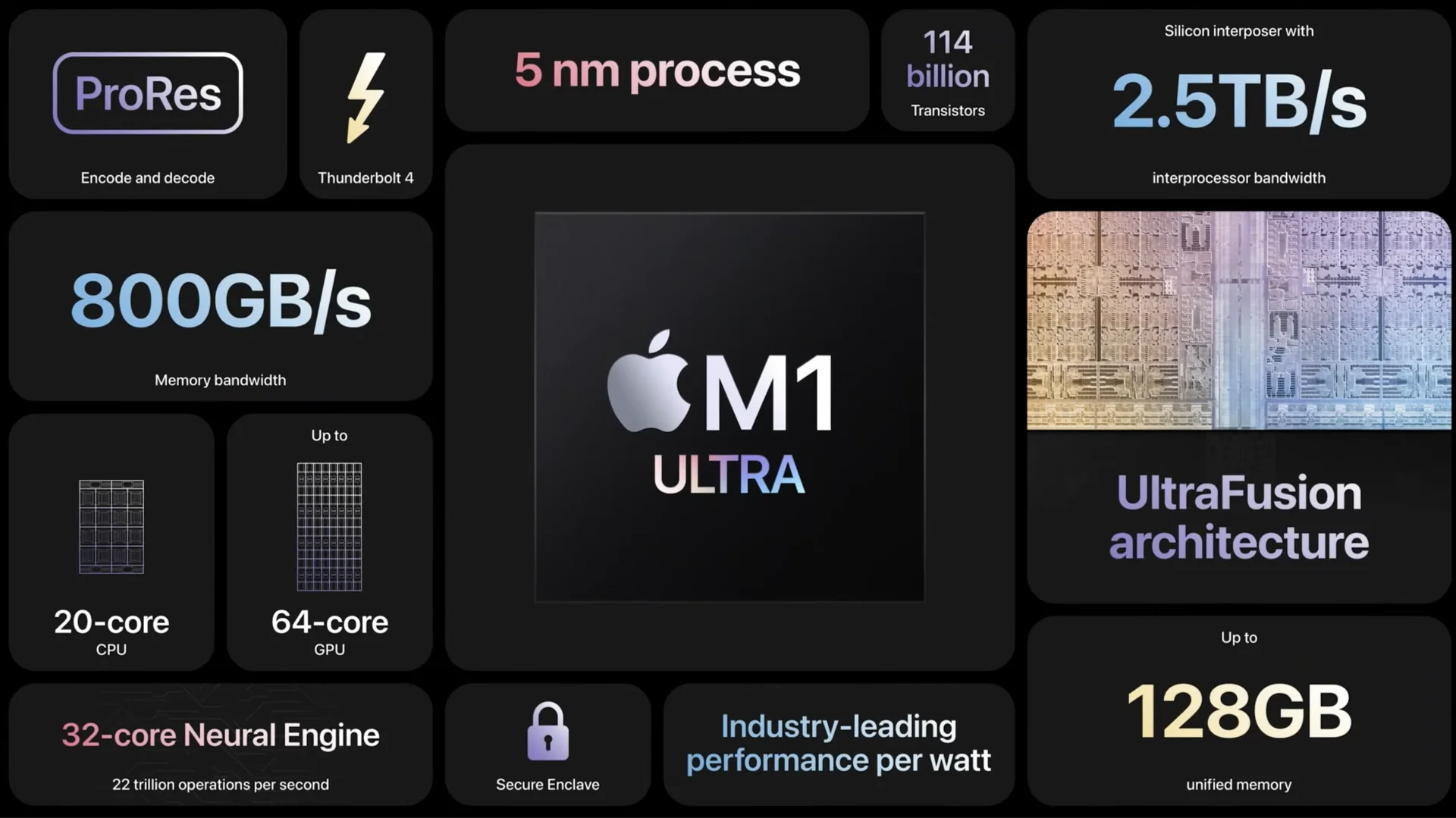

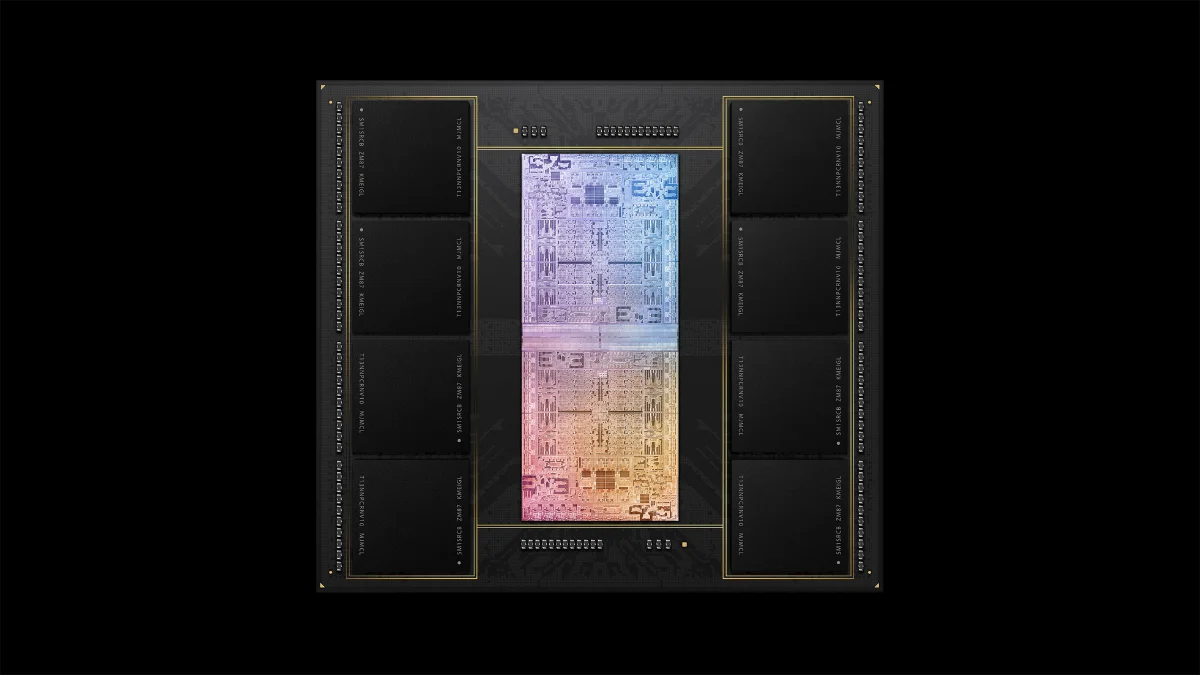

The M1 Ultra is the latest in a long line of Apple Silicon development, which started life as the A4 chip in the first-generation iPad. Over a decade of development has culminated in this: 114 billion transistors built on a 5-nanometer process, a scale comparable to the width of two strands of human DNA, capable of around 5.3 TFLOPS of double-precision calculations. To put things in perspective, ASCI White, which was used to simulate nuclear weapons testing, was the world’s most powerful supercomputer in 2000 with a performance of 4.93 TFLOPS. The M1 Ultra can beat that supercomputer’s performance while costing only $4,000.
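To make the supercomputer comparison concrete, here is a minimal Python sketch of the arithmetic. It uses only the figures quoted above (5.3 TFLOPS, 4.93 TFLOPS and $4,000) and takes them at face value; the function and constant names are our own, chosen for illustration.

```python
# Figures as quoted in the article; they are not measured here.
M1_ULTRA_TFLOPS = 5.3         # article's figure for the M1 Ultra
ASCI_WHITE_TFLOPS = 4.93      # article's figure for ASCI White (2000)
MAC_STUDIO_PRICE_USD = 4_000  # article's approximate price


def speedup(new_tflops: float, old_tflops: float) -> float:
    """Return how many times faster the new system is than the old one."""
    return new_tflops / old_tflops


def dollars_per_tflops(price_usd: float, tflops: float) -> float:
    """Return the purchase cost per TFLOPS of quoted throughput."""
    return price_usd / tflops


ratio = speedup(M1_ULTRA_TFLOPS, ASCI_WHITE_TFLOPS)
cost = dollars_per_tflops(MAC_STUDIO_PRICE_USD, M1_ULTRA_TFLOPS)

print(f"M1 Ultra vs ASCI White: {ratio:.3f}x")
print(f"Cost per TFLOPS: ${cost:.0f}")
```

On these numbers the M1 Ultra comes out roughly 7.5% ahead of ASCI White, at about $755 per TFLOPS.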

There is a lot to be said about the M1 Ultra’s specs: 20 CPU cores, two Neural Engines with 32 neural cores in total, and a massive 64-core graphics engine, which is essentially double that of the M1 Max that precedes it. There are also four media engines, which enable feats like handling up to 18 concurrent 8K ProRes 422 streams.

Underneath the surface is where the magic happens. No matter how powerful the hardware is, software is where the magic comes from. This is where the software and hardware integration that Apple is famous for gets to shine. Apple’s approach to hardware drivers is significantly different from that of Microsoft Windows. On Windows, hardware manufacturers are basically responsible for their own drivers, and as long as they adhere to the platform’s driver framework, everything is kosher. The result is that your experience with your hardware varies from one vendor to another. Apple, meanwhile, likes to take a top-down approach: it works closely with hardware vendors to ensure everything works to a T. Sometimes Apple demands hardware changes and even low-level access to get the performance it wants. Because of this, Apple’s approach has clashed with that of other vendors. The most famous clash is between Nvidia and Apple, which resulted in Apple carrying only AMD graphics chips during the Intel era of the Mac.

On Windows and Linux, there are basically endless ways to interact with the graphics card. On Windows, DirectX provides the general libraries for accessing the card, and hardware vendors supply the drivers that let Windows talk to it. There are also third-party options like OpenGL and Vulkan, which are gaining ground as popular choices. In the Apple ecosystem, you talk to the hardware only through Metal.

The reason I bring up the choice of driver and hardware solution is that it can hurt performance if the software is not optimized for the platform. In the case of Adobe, when Apple made the switch from Intel to Apple Silicon, apps like Photoshop and Premiere Pro were not yet optimized for the new M1 chips, resulting in far lower performance than their Windows counterparts. Optimizing software after a platform change takes time and effort: Adobe, a multi-billion-dollar company with a significant user base on the Mac, took months to optimize its software suite to take advantage of the new Apple Silicon features.

Nvidia RTX 3090

Nvidia is one of the legendary companies that helped build Silicon Valley, together with other household names like Apple, Microsoft, HP, Dell, Sun Microsystems and others. While some companies like Sun were bought out and others rose to become giants like Google and Facebook, Nvidia has been a constant presence in the Silicon Valley show. Nvidia made its mark in discrete graphics, swallowing up competitors like 3dfx and becoming the de facto leader in the field.

The Nvidia RTX 3090 is currently the ultimate expression of Nvidia’s technological prowess. It is the most powerful consumer graphics card right now and launched at a retail price of $1,499, although pandemic-related supply chain issues and demand from cryptocurrency miners have jacked the price up to around $2,500. Nevertheless, it is one impressive card, with over 10,000 CUDA cores and 24GB of GDDR6X video memory. The card is also quite power hungry: it reportedly needs around 390 to 400 watts, and some speculate that the 3090 Ti, an update to the 3090, would easily eat 400 to 500 watts. That is before counting rumors that the next-generation RTX 40 series will eat around 600 to 800 watts of power.

Benchmark results

During the “Peek Performance” presentation, Apple claimed that the M1 Ultra can achieve higher performance than the RTX 3090. Furthermore, Apple claimed that the M1 Ultra achieves the same performance level while using 200 watts less power than the RTX 3090.

As people have shown, Apple’s claim is a bit misleading. First, Apple’s power figure shows the RTX 3090 stopping at around 300 watts. In reality, the card will happily go from 300 watts to 400 and beyond. With overclocking and liquid cooling, you can push the card far higher than what Apple’s chart shows. So overall, the RTX 3090 is indeed still the more powerful graphics card, but Apple’s specialty cores like the media engines give the M1 Ultra an edge in optimized workflows like video production.
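The effect of cutting a chart off at 300 watts can be sketched in a few lines of Python. The sample points below are hypothetical placeholders, not measured data for any real card; they only illustrate how the peak performance a reader sees depends on where the power axis stops.

```python
# Hypothetical (watts, relative performance) samples for a discrete GPU.
# These are illustrative placeholders, NOT measurements of a real card.
HYPOTHETICAL_CURVE = [(100, 40), (200, 70), (300, 90), (400, 105)]


def best_within_cap(curve, cap_watts):
    """Return the highest relative performance reachable at or below cap_watts."""
    eligible = [perf for watts, perf in curve if watts <= cap_watts]
    if not eligible:
        raise ValueError("no sample at or below the power cap")
    return max(eligible)


# What a chart that stops at 300 W would show as the card's peak.
capped_peak = best_within_cap(HYPOTHETICAL_CURVE, 300)

# What the card reaches once it is allowed to draw 400 W.
uncapped_peak = best_within_cap(HYPOTHETICAL_CURVE, 400)

print(f"Peak shown with a 300 W cutoff: {capped_peak}")
print(f"Peak when allowed 400 W: {uncapped_peak}")
```

Any comparison drawn against the capped peak understates what the card can do at full power, which is the crux of the objection to Apple’s chart.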

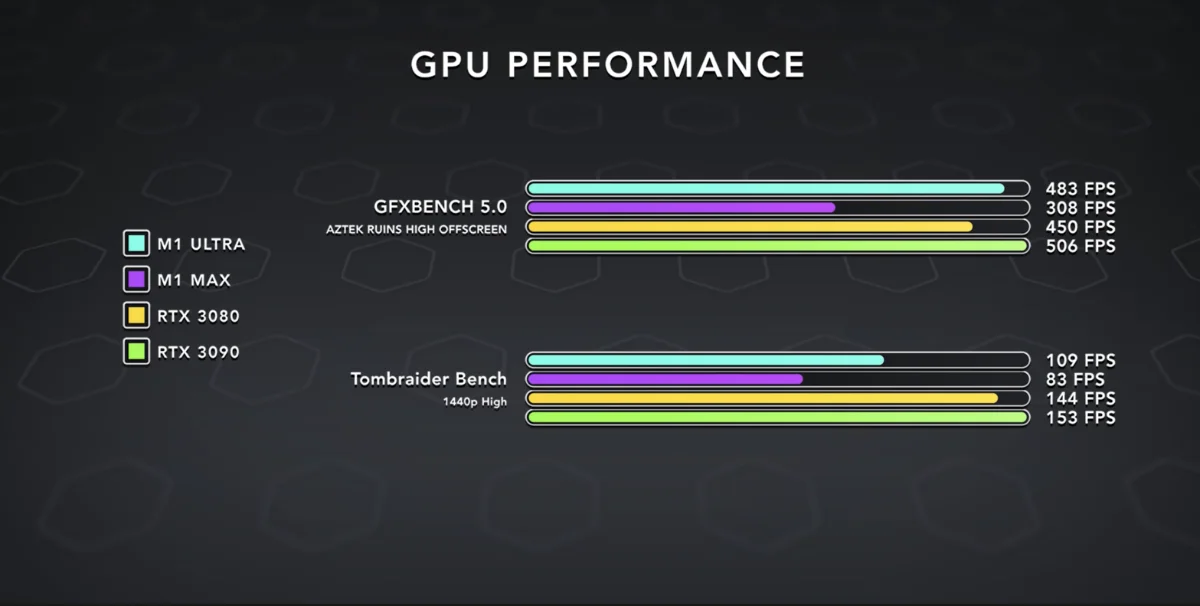

Another issue is optimization, which is shown best by Dave2D. In GFXBench 5.0, the M1 Ultra comes close to, but does not beat, the Nvidia RTX 3090. In the Tomb Raider benchmark, which runs through the Rosetta 2 translation layer, performance is significantly degraded, and the M1 Ultra is unable to compete even with a cheaper card like the RTX 3080.

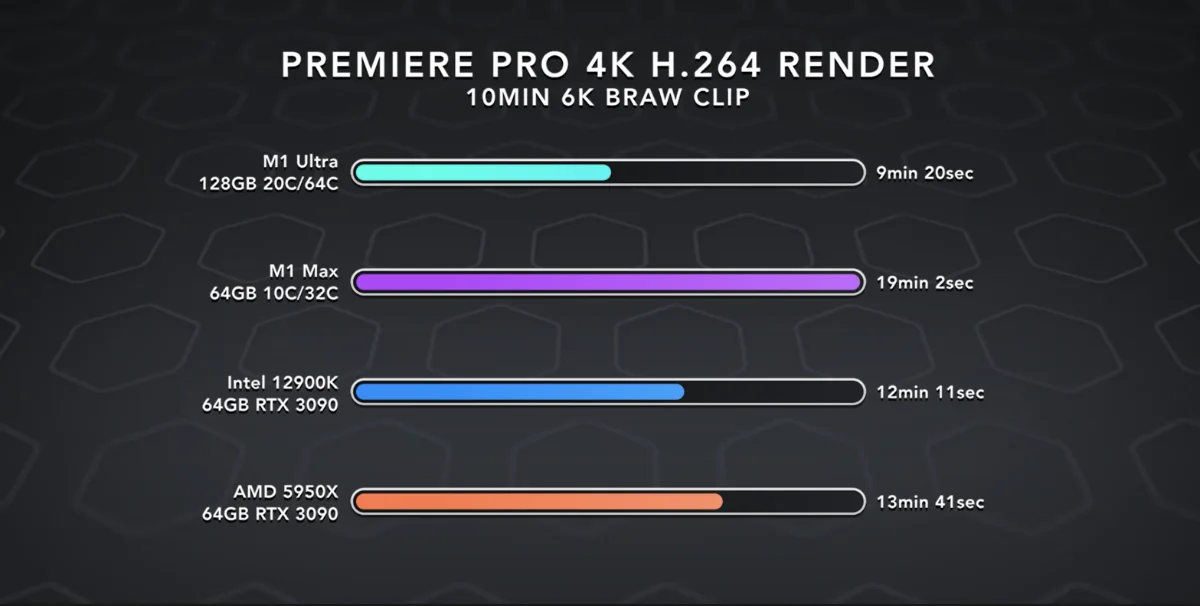

In optimized apps, as shown with Adobe Premiere Pro, the M1 Ultra can easily beat the RTX 3090 while using far less power than Nvidia’s discrete solution. This shows that optimization is as important as having the most powerful hardware, because only the right combination of software and hardware gives you the maximum performance possible.

Conclusion

In the near term, Nvidia will still make the graphics cards to beat in terms of raw performance, gaming and certain workflows. But the computing world is never static, and Nvidia will have to contend with a new player in town: one that is highly motivated, talented and has very deep pockets.

Apple, on the other hand, although not an outright winner in this debate, has shown great promise and even greater ideas. Apple has always been a trendsetter, and the M1 Ultra is no different. Ideas like workflow optimization now take precedence over power at all costs. Accelerators, cores that handle specialized but in-demand tasks, will now take center stage, as the M1 Ultra has shown that you can do more with less. Unified memory brings more performance to the table, especially when both the graphics hardware and the underlying operating system are optimized to take advantage of it. The benefits of unified memory become more apparent when working on large project files.

The most impressive part is what we know is in the pipeline and coming imminently. The M1 Ultra is not even the M1 series’ final form, as Apple is still expected to field an even more powerful chip in its flagship Mac Pro. Nvidia is expected to release its next generation of graphics cards, which many have dubbed the RTX 40 series and which will unleash even more power. What a time to live in.