An operating system is a special kind of software that runs on your computer. Its most basic function is to act as the interface between your computer’s hardware and its software. Examples on the market include Microsoft Windows, which runs on PCs, and Apple’s family: macOS on Macs, iOS on iPhones, iPadOS on iPads, and watchOS on the Apple Watch.

When Apple announced Vision Pro at WWDC 2023, it also introduced a new operating system called visionOS. Just as macOS, iPadOS, and watchOS do on their devices, visionOS runs on Vision products and acts as the interface between the Vision Pro hardware and the apps that run on it.

In this article, we explore what we know about visionOS from the demo and the simulator. As of this writing (June 2023), Vision Pro is still around six months from launch, so things can change, and we will update this article accordingly. This guide is written mostly from a user’s perspective, but it also touches on the developer’s perspective.

Concepts

Before taking a deep dive into visionOS, it is important to understand how the Vision Pro works, Apple’s vision for the device, and how Apple built visionOS around that vision. Now that’s a lot of visions.

Here are a few things to know about the Vision Pro itself:

- It’s not a VR headset: Although the headset is fully capable of immersing you in virtual reality, that is not its primary role. Instead, when you first put it on, you see the outside world, much like wearing a pair of ski goggles.

- It’s not an AR headset either: An AR headset is one where you look at your surroundings and bits of metadata pop up to augment what you see. Nope, Apple isn’t doing that.

- It’s a computer. A spatial computer: This is the “new” paradigm that Apple is trying to establish. The Vision Pro is a computer like your Mac, iPhone, or iPad, but its contents are arranged spatially around your surroundings. As Steve Jobs might have put it, it is to displays what headphones are to speakers.

Interface concepts in visionOS

Here’s how visionOS completes Apple’s vision of spatial computing.

- It’s a computer: As stated before, Apple is not making a virtual reality headset that whisks you away to other worlds to get lost in. This is a computer like your Mac or iPhone, where you can consume content or be productive. It’s a motorcycle for your mind.

- It works like your iPad or iPhone: Getting around visionOS is similar to using Apple’s best-selling product, the iPhone. There’s a Home Screen that lays out your apps, and you launch them just as you would on any other computer.

Digital Crown on the Vision Pro

- The Digital Crown is the new Home Button: Press the Digital Crown and you go straight to the Home Screen.

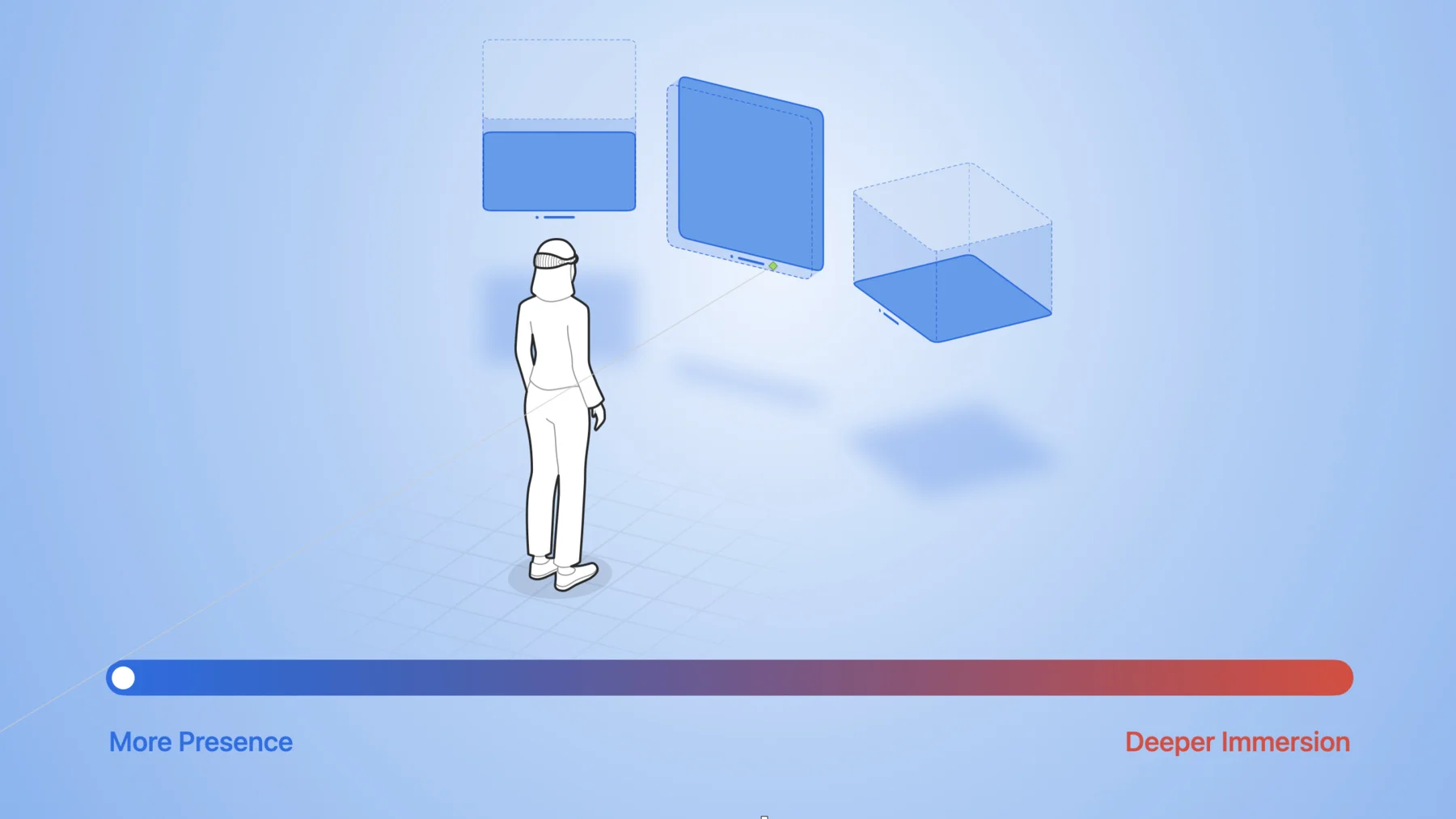

- Control your immersion level: By default, Vision Pro is in pass-through mode, but if you turn the Digital Crown, you will change your immersion level.

- Your hands and eyes are the new mouse: On a Mac, you move a mouse to move the cursor. In visionOS, Vision Pro tracks your eye movement and “moves” the cursor to where you are looking. Early reports describe the accuracy as “telepathic”. On a Mac, you click the mouse to select an item. In visionOS, external cameras track your hands, and you “click” by tapping your index finger and thumb together.

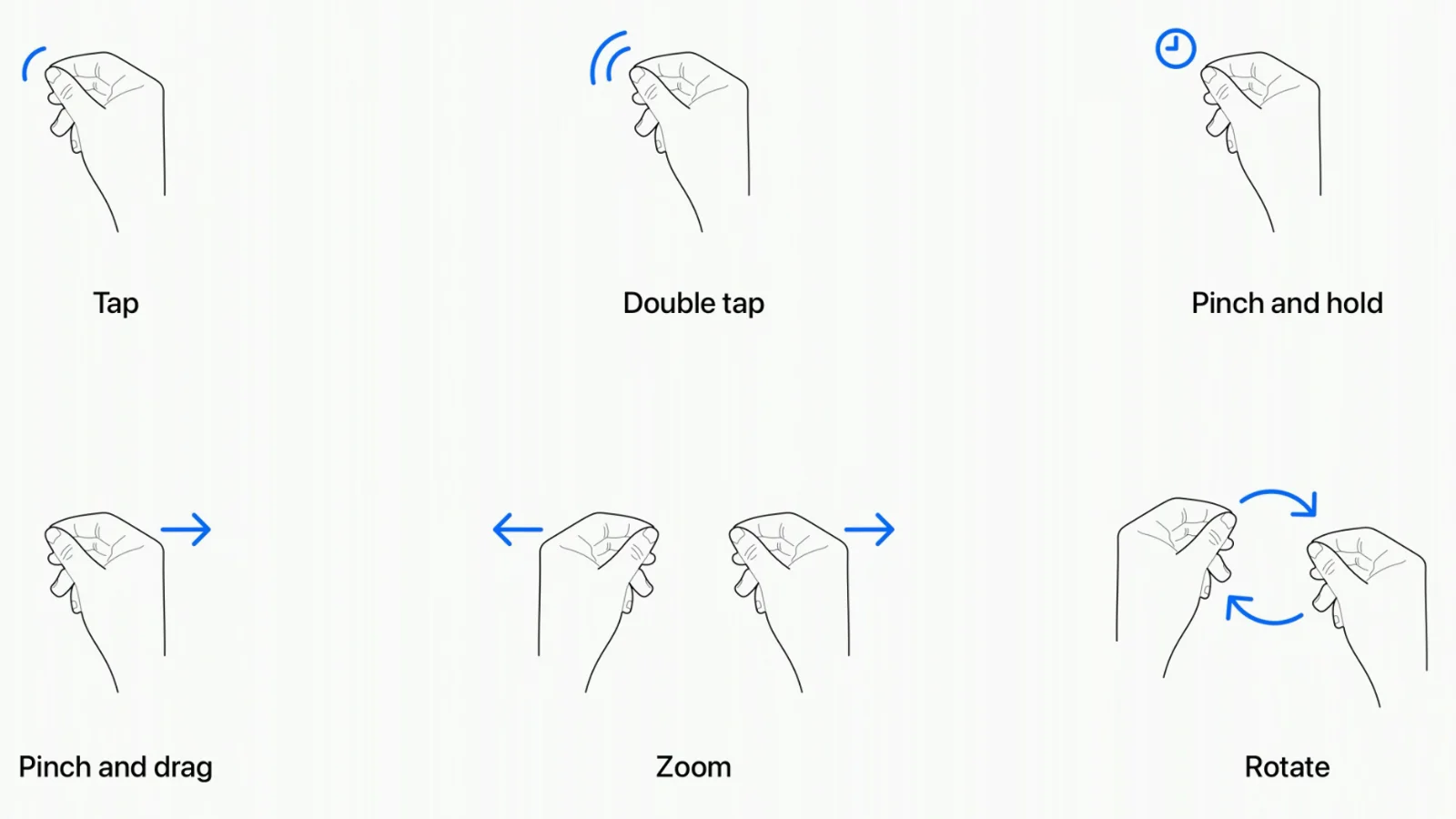

- There are other hand gestures too: Just as macOS has double-click, click and hold, and, if you own a Magic Trackpad, pinch to zoom, visionOS has similar gestures too.
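
For developers, the look-and-pinch “click” described above usually needs no special handling. Here is a minimal SwiftUI sketch, assuming a visionOS target: the system delivers the pinch as an ordinary tap, so a standard `Button` responds to it, and the two-handed zoom gesture can be read through `MagnifyGesture`. The view name `PinchDemoView` and its state properties are hypothetical, chosen only for illustration.

```swift
import SwiftUI

// Hypothetical demo view: shows how standard SwiftUI controls and gestures
// receive eye-and-hand input without any headset-specific code.
struct PinchDemoView: View {
    @State private var tapCount = 0          // number of "clicks" received
    @State private var scale: CGFloat = 1.0  // current zoom factor of the image

    var body: some View {
        VStack(spacing: 24) {
            Text("Clicked \(tapCount) times")

            // Looking at the button and tapping finger and thumb together
            // triggers the same action a mouse click or touch would.
            Button("Click me") {
                tapCount += 1
            }

            Image(systemName: "photo")
                .font(.system(size: 80))
                .scaleEffect(scale)
                // The zoom gesture arrives as a regular magnify gesture.
                .gesture(
                    MagnifyGesture()
                        .onChanged { value in
                            scale = value.magnification
                        }
                )
        }
        .padding()
    }
}
```

Because the code uses only standard SwiftUI, the same view should also respond to a touch on iPad or a click on a Mac.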

Apps concepts in visionOS

- There’s a Home Screen: Just like iOS and iPadOS, there’s a Home Screen that displays your apps in a grid. To get there, you just press the Digital Crown.

Example of multiple windows floating around you

- Your apps float!: So now that you have the apps and the gestures down, where do the apps sit? Well, they float around you. You can open multiple apps around you, and they all keep working in the background, just like having multiple windows open.

- Multiple apps running at once: Just like macOS, where multiple apps run at once in windows floating on the desktop, visionOS lets you have multiple apps floating around your space.

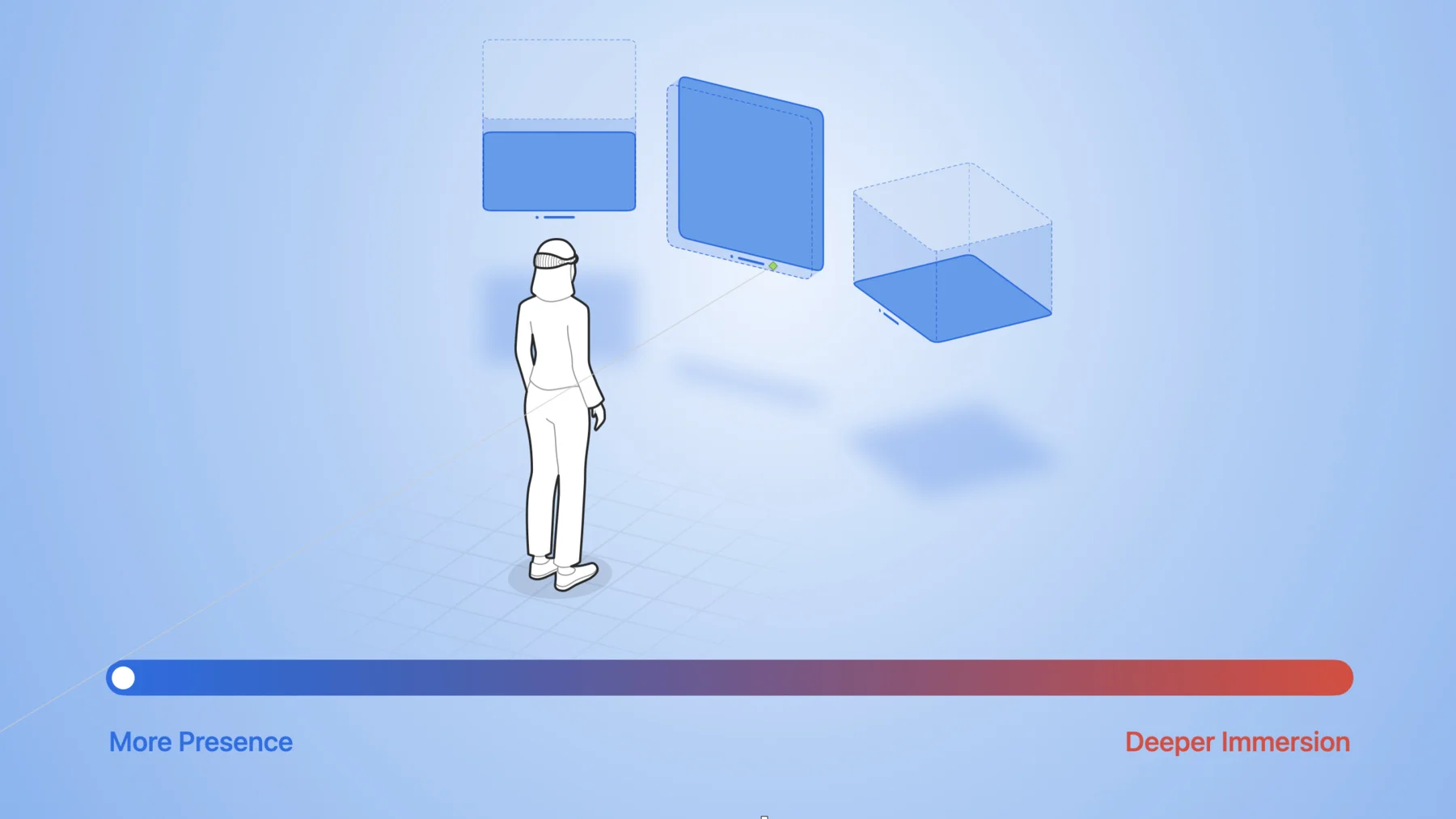

How apps in visionOS are presented: flat windows, volumes, and spaces. The immersion level is adjusted using the Digital Crown.

- Your apps will be displayed in 3 ways: According to the visionOS documentation, apps are displayed in 3 distinct ways: windows, volumes, and spaces. Windows are basically how you usually experience apps in macOS and iOS. A volume is a window with depth, forming a box in which you can place 3D virtual objects. And spaces are for when your app needs to be fully immersive.

Full Spaces mode like watching movies
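
On the developer side, these three presentation styles map onto SwiftUI scene types. The following is a minimal sketch, assuming a visionOS app target. The app name, the scene identifiers (`main`, `globe`, `theater`), and the `Globe` model asset are hypothetical placeholders.

```swift
import SwiftUI
import RealityKit

@main
struct SpatialDemoApp: App {
    // Immersion style currently used by the immersive space.
    @State private var immersionStyle: ImmersionStyle = .mixed

    var body: some Scene {
        // 1. Window: a flat pane, much like an app window on iPad or Mac.
        WindowGroup(id: "main") {
            LauncherView()
        }

        // 2. Volume: a window with depth that holds 3D content.
        WindowGroup(id: "globe") {
            Model3D(named: "Globe") // hypothetical asset in the app bundle
        }
        .windowStyle(.volumetric)
        .defaultSize(width: 0.5, height: 0.5, depth: 0.5, in: .meters)

        // 3. Space: content placed around the user instead of in a window.
        ImmersiveSpace(id: "theater") {
            RealityView { content in
                // A simple sphere about 1 m in front of the user, 1.5 m up.
                let sphere = ModelEntity(
                    mesh: .generateSphere(radius: 0.1),
                    materials: [SimpleMaterial(color: .blue, isMetallic: false)]
                )
                sphere.position = [0, 1.5, -1]
                content.add(sphere)
            }
        }
        // Allowed styles, from pass-through (.mixed) to fully immersive (.full).
        .immersionStyle(selection: $immersionStyle, in: .mixed, .progressive, .full)
    }
}

// Flat window with buttons that open the volume and the immersive space.
struct LauncherView: View {
    @Environment(\.openWindow) private var openWindow
    @Environment(\.openImmersiveSpace) private var openImmersiveSpace

    var body: some View {
        VStack(spacing: 16) {
            Button("Show volume") { openWindow(id: "globe") }
            Button("Enter space") {
                Task { _ = await openImmersiveSpace(id: "theater") }
            }
        }
        .padding()
    }
}
```

The sketch keeps all three scene types in one app to show that they can coexist. A real app would typically declare only the ones it needs.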

Inputs

Out of the box, your input device for the Vision Pro will be your eyes and hands.

Default Inputs

As stated, your eyes and hands will be the default input.

- Your eyes: Using eye-tracking technology, visionOS moves the cursor to wherever you look.

Basic gestures provided by Apple. You can customize gestures.

- Your hands: Use hand gestures to “click” at the cursor.

- Keyboard: Based on the demo, there is a pop-up keyboard when

External Inputs

- Bluetooth Keyboard: From the demo, you can add a Bluetooth keyboard to visionOS just as you can on iOS and iPadOS.

- Bluetooth Mouse/Trackpad: From the demo, the Magic Trackpad works too, with gestures such as pinch to zoom, slide, and others.

Accessibility

Although not shown in the demo, the WWDC sessions do touch on accessibility options for visionOS users, which include:

- Pointers: You can use an external mouse to point at an icon.

Ecosystem Integration

One aspect that sets visionOS and Vision Pro apart from other VR/AR headsets is the strength of the Apple ecosystem. One of the great features demoed is integration with other Apple products:

- Mac integration: In the demo, while wearing your visionOS device, you open up a Mac and the device detects that the Mac is yours. A new window pops up in visionOS and bam, you have a floating 4K display of your Mac.

- AirPods: Of course, AirPods will connect to your visionOS device.

- iCloud apps: iCloud apps like FaceTime, Notes, Photos, and many more are integrated with your visionOS device. Other 3rd-party apps that utilize Apple’s iCloud backend can do the same too.

From Developer’s Perspective

Looking under the hood, it’s ingenious how Apple has “taught” developers to build apps for visionOS without revealing anything. Of course, as of now, visionOS is still under development and things can change, but here is what we have learned from the WWDC information.

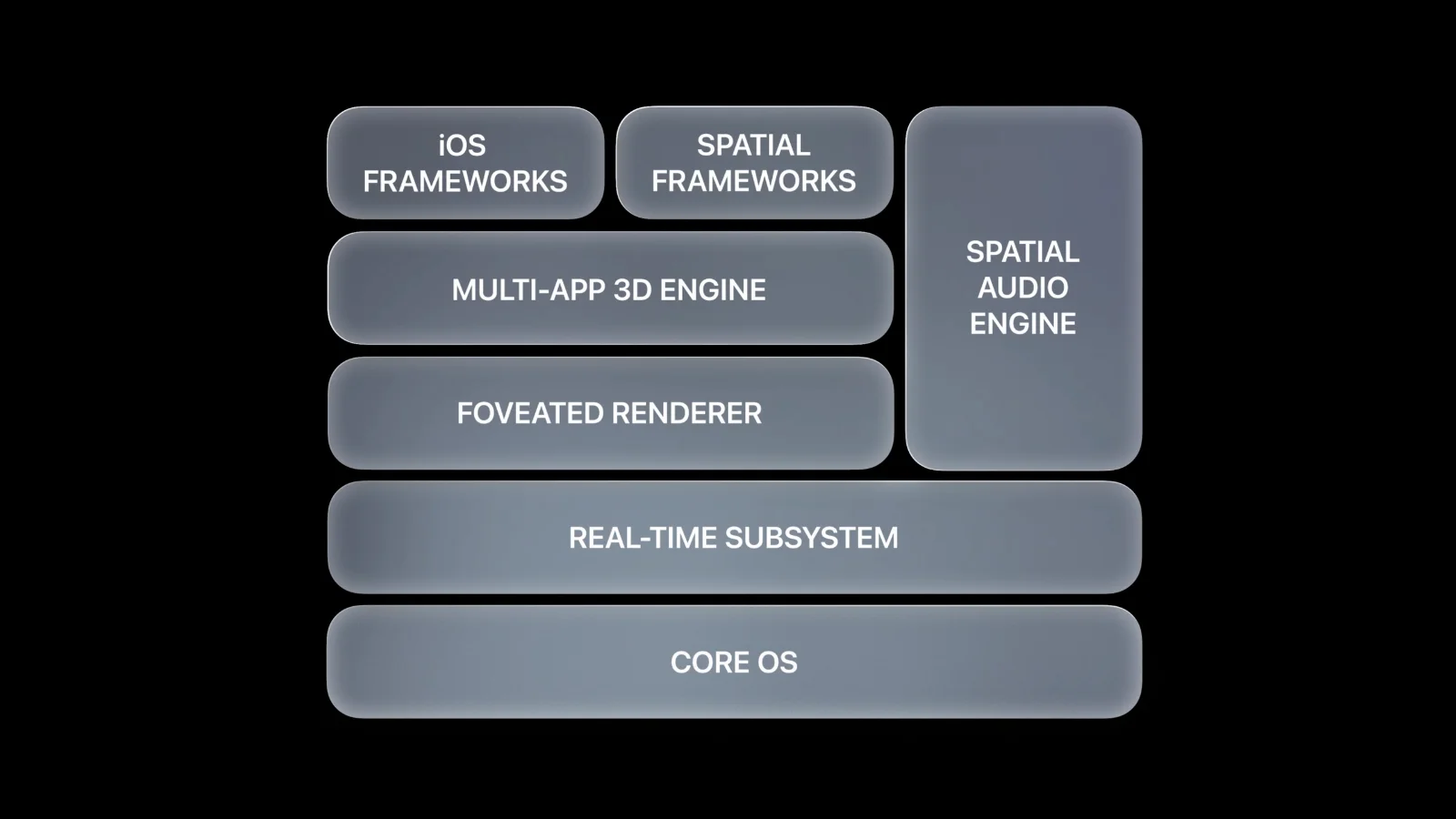

- visionOS is based on macOS: Just as iOS, iPadOS, watchOS, and the others are based on macOS, which in turn is based on NeXTSTEP, which in turn is based on BSD, visionOS is also based on macOS. The benefit is clear: macOS is a superset of all the other Apple operating systems, each of which is a modified (and neutered) version built to meet the needs of a specific device.

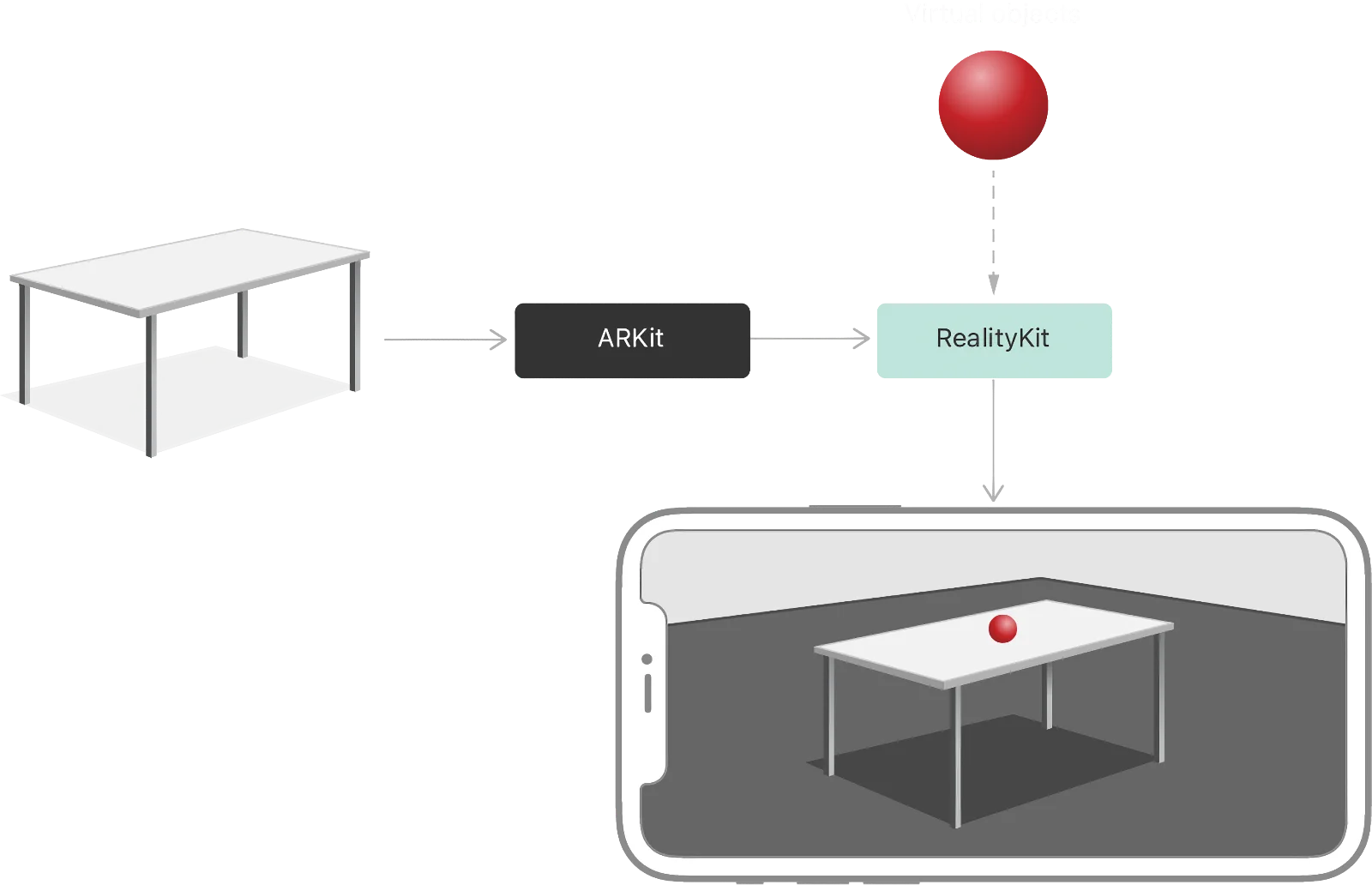

How you place virtual objects in visionOS. You use RealityKit to describe your object and use ARKit to place your object in the 'real' world.

- ARKit: ARKit is Apple’s library for detecting your surroundings and placing virtual objects in them, as seen through the camera viewfinder. ARKit was introduced in 2017 as part of iOS 11, which shipped alongside the iPhone 8 and iPhone X. The first Apple device with a LiDAR scanner was the 2020 iPad Pro, followed by the iPhone 12 Pro later that year. This gives you an idea of how long Apple has been developing AR and “teaching” developers to use it.

- RealityKit: RealityKit is Apple’s library for creating and describing virtual objects. It works in conjunction with ARKit: you create a virtual object using RealityKit and place it using ARKit. RealityKit was introduced at WWDC 2019 for iOS 13, iPadOS 13, and macOS 10.15.

- SwiftUI: To easily build apps for macOS, iOS, and others, you use Apple’s SwiftUI library. Apple extended the library so you can build visionOS apps too. Furthermore, with system defaults in the library itself, your app can be deployed to various Apple devices and it will look consistent throughout.

- UIKit: Before SwiftUI, there was UIKit. Apple still maintains both libraries because UIKit allows more customization. SwiftUI and UIKit are meant to complement each other rather than compete.
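
The division of labor between describing an object and placing it in the room can be sketched roughly as follows. This is a minimal example under stated assumptions. It does not call ARKit directly: it uses RealityKit’s `AnchorEntity` with a plane target and leaves the surface detection to the system. The view name `TabletopCubeView` and all sizes are hypothetical, and the view is meant to be hosted inside an `ImmersiveSpace`.

```swift
import SwiftUI
import RealityKit

// Hypothetical view: puts a small cube on the first suitable table surface.
struct TabletopCubeView: View {
    var body: some View {
        RealityView { content in
            // RealityKit: describe the virtual object (a 10 cm cube).
            let cube = ModelEntity(
                mesh: .generateBox(size: 0.1),
                materials: [SimpleMaterial(color: .orange, isMetallic: false)]
            )
            // Raise the cube by half its height so it rests on the surface.
            cube.position = [0, 0.05, 0]

            // Placement: request a horizontal plane classified as a table
            // that measures at least 20 cm by 20 cm.
            let tableAnchor = AnchorEntity(
                .plane(.horizontal, classification: .table, minimumBounds: [0.2, 0.2])
            )
            tableAnchor.addChild(cube)

            // SwiftUI hosts the result through RealityView.
            content.add(tableAnchor)
        }
    }
}
```

Apps that need finer control over tracking data would likely work with ARKit directly, which this sketch leaves out to stay short.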

Conclusion

Apple, for one, has been developing tools for visionOS in plain sight for years before the product even arrives. This shows the long-term strategic thinking and moves that set Apple apart from its rivals.

Since Apple has been releasing the tools and libraries needed for app development years ahead of the actual product, developers will find a lot that is familiar when creating such apps. As the saying goes, “It’s easier to connect the dots looking back than forward”.
