Apple’s M1 Macs have shown that, with only 8GB of RAM, they can outperform PCs with double or even quadruple the memory. The answer to how this is possible lies in Apple’s approach of tightly pairing software and hardware toward a common goal: processing as much I/O as possible. Optimization has its limits with large datasets. Even so, for the common tasks a typical user faces, it is surprising how much an M1 Mac can get away with on just 8GB of RAM.

All about optimization

The key difference between Apple’s macOS and other operating systems such as Linux, Windows and, to an extent, Android is that Apple controls every aspect of the hardware the OS runs on. Others that have taken this approach include the traditional workstation and server vendors, such as IBM with AIX, HP with HP-UX, and DEC with OpenVMS.

Because Apple controls everything that goes into its hardware, the macOS team only needs to make macOS work on Apple hardware. This means it can optimize the software specifically for that hardware: anything that is not needed is not included.

The M1 Macs take this to the next level. Even the CPU, usually the costliest and hardest part to design, is designed entirely in-house by Apple. This gives Apple the opportunity to design macOS and the chip together from the ground up, with the OS team and the chip team working together from day one to build the best solution.

Less is more

One of these solutions is a shared pool of RAM, which Apple calls Unified Memory, connected through Apple’s own I/O interconnect, the Apple Fabric. The Apple Fabric handles I/O for several components:

- the on-chip GPU
- the on-chip CPU, which has both high-efficiency and high-performance cores
- the Neural Engine
- the Secure Enclave
- external I/O such as Apple’s own storage and the USB 4 / Thunderbolt ports

Keeping the requirements this streamlined makes it easier to meet the I/O and processing goals.

Because Apple designed the M1 chip itself, it can apply further optimizations at the chip level. Traditionally, a system has a discrete GPU and a CPU, each with its own memory placed near the respective chip.

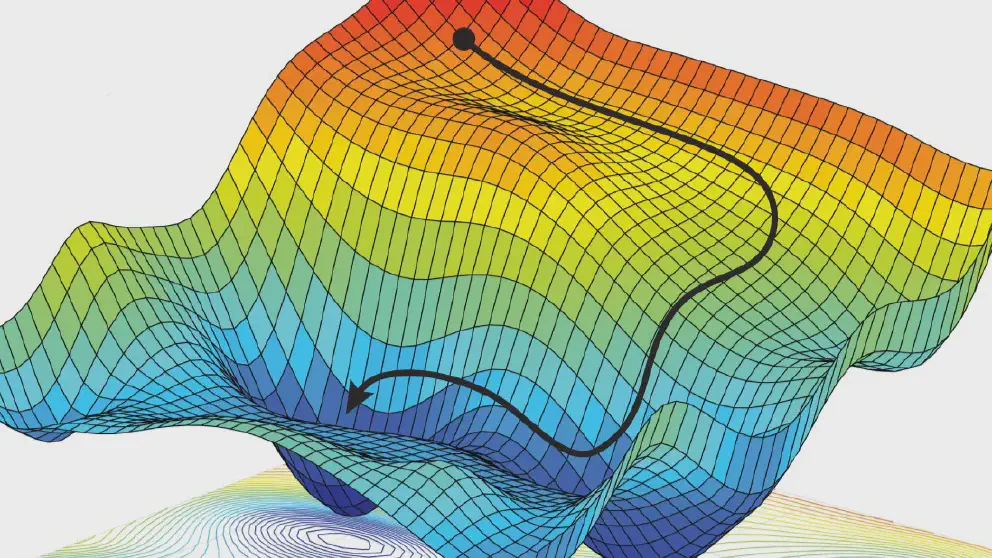

Say you want to process some vector data to create a 3D scene. You would copy the data from storage into CPU memory, then tell the GPU to take that chunk of memory and process it. The GPU would then copy the data from CPU RAM into GPU RAM, process it, and finally display the result.

With the M1, macOS simply tells storage to load the vector data into RAM and asks the GPU to process it right away. There is no extra step to copy the data from CPU RAM to GPU RAM. When the GPU is done, the result goes straight over the Apple Fabric to the display. That’s another optimization.
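The difference between the two data paths can be sketched as a toy model that only counts how often the scene data is moved. This is illustrative Python, not macOS, Metal, or driver code. The function names and the 512 MiB scene size are hypothetical. Real drivers also use techniques such as DMA that this model ignores.

```python
# Toy model (not real macOS/Metal code) comparing the two data paths
# described above. Sizes are in bytes.
from dataclasses import dataclass


@dataclass
class Transfer:
    src: str
    dst: str
    nbytes: int


def discrete_gpu_path(nbytes: int) -> list[Transfer]:
    """Hypothetical traditional path: storage -> CPU RAM -> GPU RAM."""
    return [
        Transfer("storage", "cpu_ram", nbytes),
        Transfer("cpu_ram", "gpu_ram", nbytes),  # the extra copy
    ]


def unified_memory_path(nbytes: int) -> list[Transfer]:
    """Hypothetical unified path: storage -> shared RAM, GPU reads it in place."""
    return [Transfer("storage", "unified_ram", nbytes)]


def bytes_moved(path: list[Transfer]) -> int:
    """Total bytes copied along a path."""
    return sum(t.nbytes for t in path)


if __name__ == "__main__":
    scene = 512 * 1024 * 1024  # hypothetical 512 MiB of vector data
    for name, path in [("discrete", discrete_gpu_path(scene)),
                       ("unified", unified_memory_path(scene))]:
        print(f"{name}: {len(path)} transfer(s), "
              f"{bytes_moved(path) // 2**20} MiB moved")
```

In this model the discrete path moves the payload twice and briefly holds two copies of it. The unified path moves it once, which is the saving the paragraph above describes.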

This is the same reason the iPhone has less RAM than its Android counterparts yet still maintains a performance lead. Android is designed to be flexible, but that flexibility comes at the price of higher hardware requirements.

Limits of optimization

However, there are limits to how far the hardware can be optimized to use less RAM. Optimization does not make the data any smaller. If a dataset is larger than the available RAM, the system still has to swap data between storage and RAM. Some benchmarks show that a 16GB M1 does better than an 8GB M1 at encoding 8K video to 4K, because the dataset is too big for 8GB of RAM.
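The arithmetic behind this limit can be sketched in a few lines. This is a deliberately simplified model, not how macOS actually manages memory. The 10 GiB working set and the 3 GiB reserved for the OS and other apps are hypothetical numbers. Real systems such as macOS also compress memory and evict caches before they resort to swapping.

```python
# Toy model: how much of a working set spills to swap for a given RAM size.
# All figures are hypothetical; real memory pressure also depends on
# memory compression, file caches, and whatever else is running.

GiB = 1024 ** 3


def swap_needed(working_set: int, ram: int, reserved: int) -> int:
    """Bytes of the working set that cannot stay resident in RAM."""
    available = max(ram - reserved, 0)       # RAM left for this workload
    return max(working_set - available, 0)   # the overflow must be paged out


if __name__ == "__main__":
    working_set = 10 * GiB  # hypothetical footprint of a large video export
    reserved = 3 * GiB      # hypothetical share used by the OS and other apps
    for ram_gib in (8, 16):
        spill = swap_needed(working_set, ram_gib * GiB, reserved)
        print(f"{ram_gib} GiB RAM: {spill / GiB:.0f} GiB must be swapped")
```

With these made-up numbers, the 8 GiB machine has to page about 5 GiB to storage, while the 16 GiB machine keeps everything resident. No amount of software optimization removes that gap.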

Conclusion

M1 Macs are more efficient than Intel Macs because Apple built macOS and the CPU from the ground up to work with each other. Here, optimized means that everything not needed is thrown out and unnecessary steps are cut. The chip has been designed to eliminate those steps, and the OS has been designed to take advantage of the hardware.